The Daily Observer, London Desk. Reporter: John Furner

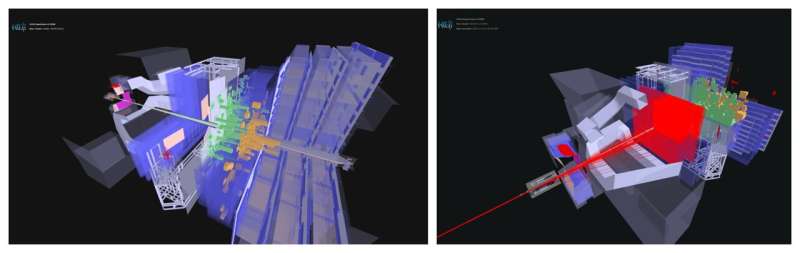

Current technology does not allow all Large Hadron Collider (LHC) proton–proton collision data to be stored and analyzed. It is therefore necessary to filter the data according to the scientific goals of each experiment. Physicists call this selection process the “trigger.”

Data taking and analysis at the LHC have therefore traditionally been performed in two steps. In the first, which physicists call “online,” the detector records the data, which is then read out by fast electronics and computers, and a selected fraction of the events is stored on disks and magnetic tapes.

Later, the stored events are analyzed “offline.” In offline analysis, important data taken from the online process is used to determine the parameters with which to adjust and calibrate LHCb’s subdetectors. This whole process takes a long time and uses a large amount of human and computing resources.

In order to speed up and simplify this process, the LHCb collaboration has made a revolutionary improvement to data taking and analysis. With a new technique named real-time analysis, the process of adjusting the subdetectors takes place online automatically and the stored data is immediately available offline for physics analysis.

In LHC Run 2, LHCb’s trigger was a combination of fast electronics (“hardware trigger”) and computer algorithms (“software trigger”) and consisted of multiple stages. From the 30 million proton collisions per second (30 MHz) happening in the LHCb detector, the trigger system selected the more interesting collision events, eventually reducing the event rate to around 150 kHz. Then, a variety of automatic processes used this data to calculate new parameters to adjust and calibrate the detector.
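To put the Run 2 figures in perspective, the size of the reduction can be worked out directly. The short Python sketch below uses only the two rates quoted above; the function name `reduction_factor` and the constant names are illustrative and are not part of any LHCb software.

```python
def reduction_factor(input_rate_hz: float, output_rate_hz: float) -> float:
    """Return how many input events there are for each event that is kept."""
    if output_rate_hz <= 0:
        raise ValueError("output rate must be positive")
    return input_rate_hz / output_rate_hz


# Rates quoted in the article for Run 2.
RUN2_COLLISION_RATE_HZ = 30e6  # 30 MHz of proton collisions
RUN2_OUTPUT_RATE_HZ = 150e3    # around 150 kHz after the trigger

factor = reduction_factor(RUN2_COLLISION_RATE_HZ, RUN2_OUTPUT_RATE_HZ)
kept_fraction = 1 / factor

print(f"about 1 in {factor:.0f} collisions kept ({kept_fraction:.1%})")
```

With these inputs the trigger keeps roughly one collision in 200, or about 0.5 percent of what happens in the detector.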

For Run 3 and beyond, the whole trigger system has changed radically: the hardware trigger has been removed and the whole detector is read out at the full LHC bunch-crossing rate of 40 MHz. This allows LHCb to use real-time analysis for the full selection of data, making the selection much more precise and flexible.

The real-time reconstruction allows LHCb to not only cherry-pick interesting events but also compress the raw detector data in real time. This means there is tremendous flexibility to select both the most interesting events and the most interesting pieces of each event, thus making the best use of LHCb’s computing resources. In the end, around 10 gigabytes of data are permanently recorded each second and made available to physics analysts.
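As a rough illustration of what that recording rate implies, the Python sketch below converts it into a volume per hour and into an average number of bytes per bunch crossing. It assumes a decimal gigabyte (10^9 bytes) and uninterrupted running, neither of which is stated in the article, and the constant and function names are hypothetical. The per-crossing figure is only an average spread over every crossing, not the size of a stored event.

```python
# Figures quoted in the article; the unit convention is an assumption.
RECORDED_BYTES_PER_SECOND = 10e9  # "around 10 gigabytes", assuming 1 GB = 10**9 bytes
BUNCH_CROSSING_RATE_HZ = 40e6     # 40 MHz readout rate quoted for Run 3


def volume_terabytes(seconds: float) -> float:
    """Data volume recorded over `seconds` of continuous running, in terabytes."""
    return RECORDED_BYTES_PER_SECOND * seconds / 1e12


per_hour_tb = volume_terabytes(3600)
bytes_per_crossing = RECORDED_BYTES_PER_SECOND / BUNCH_CROSSING_RATE_HZ

print(f"{per_hour_tb:.0f} TB per hour of continuous running")
print(f"{bytes_per_crossing:.0f} bytes kept per bunch crossing, on average")
```

Under these assumptions, an hour of running adds about 36 terabytes to storage, while the average kept per bunch crossing is only about 250 bytes, which shows how aggressively the real-time selection and compression must work.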

The success of real-time analysis was possible only thanks to the extraordinary work of the online and subdetector teams during the construction and commissioning of this brand-new version of the LHCb detector.